Projects

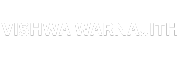

YOGS Twin Telescope Alignment System (Aug, 2025 – Present)

A data pipeline and automated alignment system for the York Observatory Group of Students’ twin telescopes, improving pointing precision and synchronisation for dual-instrument observations. The system performs real-time star detection, centroiding and cross-correlation-based alignment using Python and OpenCV. It integrates camera control via the ZWO ASI SDK and coordinates telescope movement with PlaneWave control, while logging telemetry and calibration data for post-analysis and reproducible observing.

Computer vision pipeline with Python, OpenCV and scikit-learn for star detection.

Camera integration via ZWO ASI Planetary Camera SDK and PlaneWave coordination.

Telemetry logging and calibration for analysis and synchronised observations.
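As a rough illustration of the centroiding step, the sketch below computes an intensity-weighted centroid for one star in each telescope's frame and reports the pixel offset between them. It is a simplified stand-in: it uses plain Python lists and a centroid difference rather than OpenCV or cross-correlation, and the function names and synthetic frames are assumptions made for the example, not the project's code.

```python
def weighted_centroid(frame, threshold=0.0):
    """Return the intensity-weighted centroid (x, y) of pixels above threshold."""
    total = sum_x = sum_y = 0.0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value > threshold:
                weight = value - threshold  # background-subtracted intensity
                total += weight
                sum_x += weight * x
                sum_y += weight * y
    if total == 0:
        raise ValueError("no pixels above threshold")
    return sum_x / total, sum_y / total


def pointing_offset(frame_a, frame_b, threshold=0.0):
    """Pixel shift (dx, dy) that would move the star in frame_b onto frame_a."""
    ax, ay = weighted_centroid(frame_a, threshold)
    bx, by = weighted_centroid(frame_b, threshold)
    return ax - bx, ay - by


# Synthetic 5x5 frames (hypothetical data): the same star, one pixel apart in x.
frame_a = [
    [0, 0, 0, 0, 0],
    [0, 1, 2, 1, 0],
    [0, 2, 8, 2, 0],
    [0, 1, 2, 1, 0],
    [0, 0, 0, 0, 0],
]
frame_b = [[0] + row[:-1] for row in frame_a]  # star shifted right by one pixel

print(pointing_offset(frame_a, frame_b))
```

In a real pipeline the offset in pixels would still need converting to an angular correction using the camera's plate scale before it could drive the mount.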

YOGS Integrated Weather Data Acquisition System (July, 2025 – Aug, 2025)

Engineered a dual-program weather data acquisition system to enhance the York Observatory Group of Students’ environmental monitoring and photon-density analysis. One program polls the Met Office Global Spot API every ten minutes; the other records high-frequency ISA weather station telemetry every four seconds. A centralised database merges the irradiance and meteorological readings for analysis, visualisation and improved observing decision-making.

Dual acquisition: Met Office API (10-minute) and ISA station (4-second) streams.

Centralised database integration using Python and SQL for combined analysis.

Enables correlation of weather data with photon density and observatory decisions.
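One way to combine a 4-second stream with a 10-minute stream is an "as-of" join, where each high-frequency sample is paired with the most recent low-frequency reading. The sketch below shows that idea in plain Python; the field names (`irradiance`, `cloud_cover`) and the sample values are placeholders, and the real system's SQL schema may merge the data differently.

```python
from bisect import bisect_right


def merge_streams(station_rows, api_rows):
    """Attach the most recent API reading to each station sample (as-of join).

    Both inputs are lists of (timestamp_seconds, payload_dict), sorted by time.
    """
    api_times = [t for t, _ in api_rows]
    merged = []
    for t, station in station_rows:
        i = bisect_right(api_times, t) - 1  # last API row at or before t
        api = api_rows[i][1] if i >= 0 else {}
        merged.append({"t": t, **station, **api})
    return merged


# Hypothetical sample data around a 10-minute API update at t = 600 s.
api_rows = [(0, {"cloud_cover": 20}), (600, {"cloud_cover": 75})]
station_rows = [(t, {"irradiance": 500 - t // 4}) for t in range(596, 609, 4)]

merged = merge_streams(station_rows, api_rows)
for row in merged:
    print(row)
```

Station samples taken before the first API reading simply carry no API fields, which keeps the gap visible rather than filling it with a guess.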

YOGS Dome Human Detection & Safety System (July, 2025 – Aug, 2025)

A computer-vision safety system that detects human presence inside the observatory dome and triggers relay-based shutdown to prevent accidents during telescope movement. Built around a YOLO-inspired ML model integrated with OpenCV for real-time detection, the system signals hardware relays to power off dome mechanisms when a person is present. The implementation is aligned with risk assessment and embedded-control best practices for observatory safety.

Real-time human detection with a YOLO-inspired model using OpenCV.

Relay-based hardware integration to shut down dome mechanisms on detection.

Designed to meet safety and risk-management requirements during operations.
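The detection-to-relay decision can be pictured as a small latch: any confident person detection cuts power immediately, and power returns only after several consecutive clear frames. The sketch below is illustrative only. The class name, the confidence threshold and the automatic re-enable rule are all assumptions (a real installation might require a manual reset instead), and no detector or relay hardware is involved.

```python
class DomeInterlock:
    """Latch that disables dome power while a person is detected.

    Power is restored only after `clear_frames` consecutive frames with no
    detection at or above `min_confidence` (an assumed policy).
    """

    def __init__(self, min_confidence=0.5, clear_frames=3):
        self.min_confidence = min_confidence
        self.clear_frames = clear_frames
        self.power_enabled = True
        self._clear_count = 0

    def update(self, person_confidences):
        """Feed one frame's person-detection scores; return the relay state."""
        if any(c >= self.min_confidence for c in person_confidences):
            self.power_enabled = False  # cut power immediately
            self._clear_count = 0
        elif not self.power_enabled:
            self._clear_count += 1
            if self._clear_count >= self.clear_frames:
                self.power_enabled = True
        return self.power_enabled


# Hypothetical per-frame detector output: a list of person confidences.
interlock = DomeInterlock(min_confidence=0.5, clear_frames=2)
states = [interlock.update(frame) for frame in ([], [0.9], [], [0.2], [])]
print(states)
```

Requiring several clear frames before re-enabling avoids the relay chattering when a detection flickers between frames.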

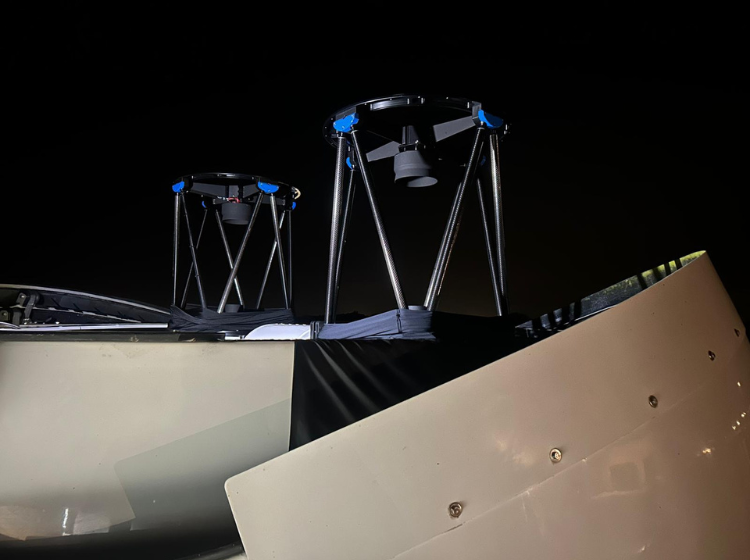

Raspberry Pi GUI for Medical Engineering Research (April, 2025 – July, 2025)

A Linux-optimised PyQt GUI wrapping existing Python code to simplify data acquisition for biomedical research. Deployed on Raspberry Pi 4 with Pi Camera, the interface enables non-programmer lab staff to capture brightness levels of bacterial pathogens and log observations efficiently. The project emphasises usability, reproducible workflows and integration with lab procedures while leveraging Raspberry Pi hardware for low-cost automation.

PyQt GUI on Raspberry Pi 4 for brightness data capture with Pi Camera.

Designed for non-programmer lab staff to streamline data collection.

Emphasis on usability, Linux compatibility and rapid prototyping with resin printing.

Project YES Mobile (July, 2025 – Present)

YES Mobile is a member-focused Android application for the York Engineering Society, designed to centralise engagement and operational tools. Features include user login, announcements, event calendars, discussion forums, a tech-help chatbot, resource library, mentorship directory, QR check-ins, and offline mode. The app is built with Android Studio and Firebase, with UI workflows prototyped in Figma. An Admin Panel supports society management, and offline mode keeps the app usable in connectivity-limited environments.

Native Android app developed in Android Studio with Firebase backend.

Features: login, events, forum, chatbot, mentorship, QR check-ins and offline mode.

UI/UX prototyped in Figma; includes Admin Panel for society operations.

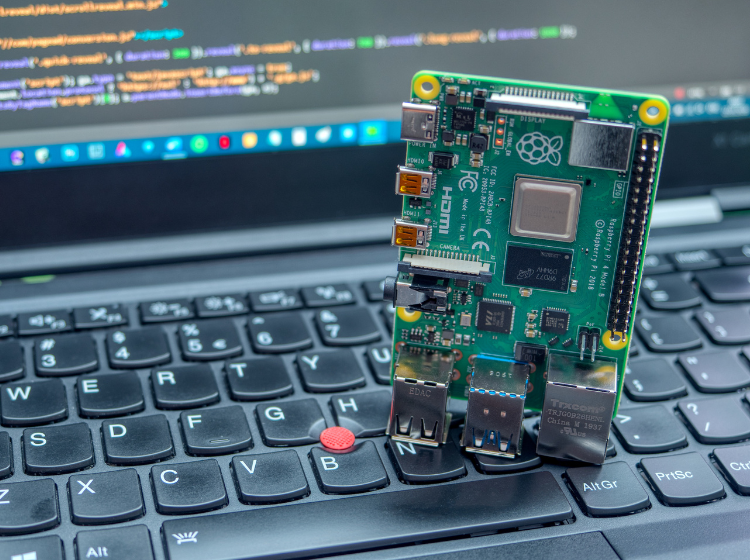

Project Ebortronics Gaming (Mimic console) (Feb, 2025 – June, 2025)

Ebortronics Gaming is a custom console developed by a four-person team to support cognitive skill development for autistic children through “Mimic”, a memory game. I acted as Project Lead, Project Manager and Firmware Developer, building two firmware versions in C++ for the Nucleo F303K8 microcontroller. The console uses joystick and button inputs, a 1602 LCD, customisable characters and a screen-time warning to balance engagement and safety.

Lead role: project management and firmware development on STM32 Nucleo F303K8.

Two software versions: structured and object-oriented C++ implementations.

Designed for cognitive skills training with input controls, LCD and safety features.

Project VersaDisplay (Nov, 2024 – Feb, 2025)

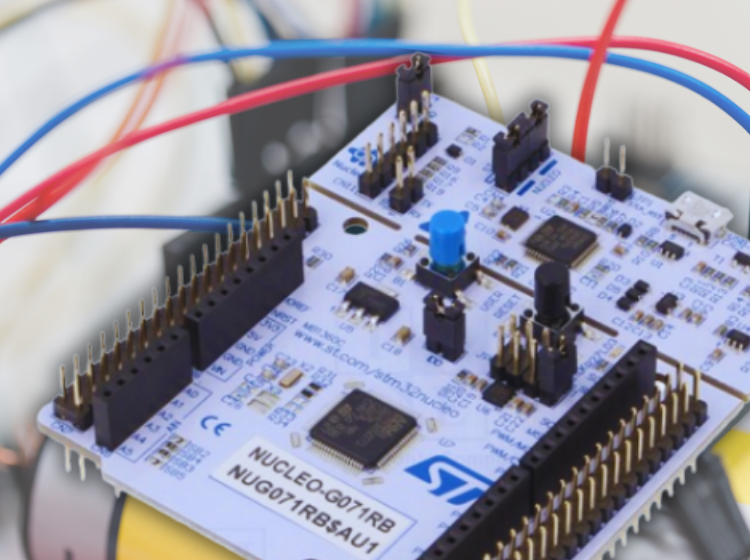

VersaDisplay is an embedded firmware project to control multiple seven-segment displays using a Nucleo G071RB microcontroller. The system applies concurrent and object-oriented programming, event handling and threading to deliver smooth real-time control. Designed for scalability, the firmware aims to let application code drive the displays with a single line of code, showcasing embedded C++ techniques and efficient concurrent firmware design.

STM32 Nucleo G071RB microcontroller with embedded C++ and Keil Studio.

Concurrent programming, OOP, event and thread handling for real-time control.

Scalable API goal: drive multiple displays with minimal code footprint.
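To illustrate what a one-call display API has to do underneath, the sketch below converts a number into per-digit segment patterns. It is written in Python for readability rather than the project's embedded C++, and it assumes the common bit order (bit 0 = segment a through bit 6 = segment g, active-high). The function name is hypothetical.

```python
# Segment patterns for digits 0-9; bit 0 = a, bit 1 = b, ... bit 6 = g.
SEGMENTS = [0x3F, 0x06, 0x5B, 0x4F, 0x66, 0x6D, 0x7D, 0x07, 0x7F, 0x6F]
BLANK = 0x00


def encode_number(value, digits):
    """Return one segment pattern per display, left to right.

    Leading positions are blanked instead of showing zeros.
    """
    if not 0 <= value < 10 ** digits:
        raise ValueError("value does not fit on the displays")
    text = str(value).rjust(digits)  # pad with spaces on the left
    return [BLANK if ch == " " else SEGMENTS[int(ch)] for ch in text]


# The "single line" a caller would write, here for four displays.
patterns = encode_number(42, 4)
print([hex(p) for p in patterns])
```

On the microcontroller, a display thread would then multiplex these patterns onto the GPIO pins; that part depends on the wiring and is not shown.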

4-bit CPU Project

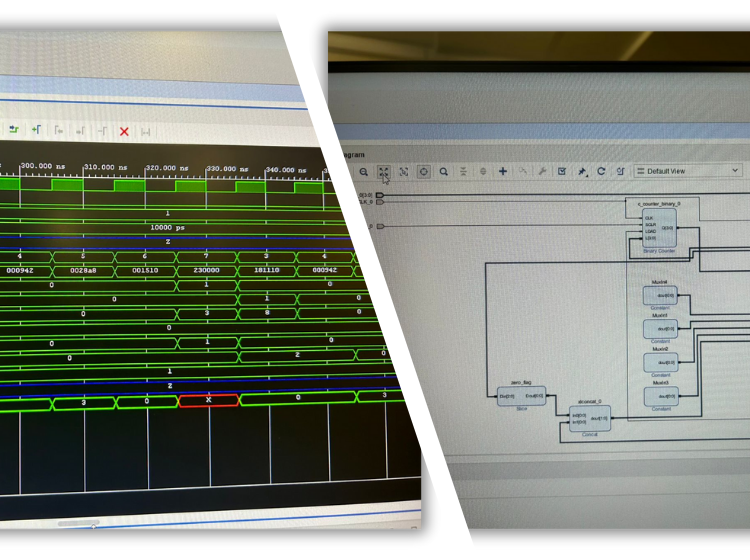

Designed and implemented a 4-bit CPU on a Zynq 7000 SoC FPGA using Xilinx Vivado and VHDL. The CPU includes a datapath, program counter, instruction memory, registers, shift register and a gate-level ALU supporting arithmetic, logic and shifts, managed by a 21-bit control word under a Harvard architecture. A VHDL testbench verified functionality, strengthening expertise in FPGA design and digital computer architecture.

FPGA implementation on Zynq 7000 SoC using Xilinx Vivado and VHDL.

Gate-level ALU, datapath, instruction memory and 21-bit control word (Harvard).

VHDL testbench validation and hands-on digital architecture experience.
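A behavioural model can be a convenient reference when checking a gate-level ALU against a testbench. The sketch below models a 4-bit ALU in Python, with the adder built from one-bit full adders in ripple-carry fashion. The operation names and the carry convention for subtraction are assumptions for illustration; they are not the project's actual instruction set or control-word encoding.

```python
MASK = 0xF  # 4-bit data width


def full_adder(a, b, carry_in):
    """One-bit full adder expressed with XOR, AND and OR gates."""
    total = a ^ b ^ carry_in
    carry_out = (a & b) | (carry_in & (a ^ b))
    return total, carry_out


def ripple_add(a, b, carry_in=0):
    """Add two 4-bit values bit by bit; return (result, carry_out)."""
    result, carry = 0, carry_in
    for i in range(4):
        bit, carry = full_adder((a >> i) & 1, (b >> i) & 1, carry)
        result |= bit << i
    return result, carry


def alu(op, a, b=0):
    """Return (result, carry) for a hypothetical set of operations."""
    a &= MASK
    b &= MASK
    if op == "ADD":
        return ripple_add(a, b)
    if op == "SUB":  # a + NOT b + 1; carry 1 means no borrow
        return ripple_add(a, b ^ MASK, 1)
    if op == "AND":
        return a & b, 0
    if op == "OR":
        return a | b, 0
    if op == "XOR":
        return a ^ b, 0
    if op == "SHL":  # carry holds the bit shifted out
        return (a << 1) & MASK, (a >> 3) & 1
    if op == "SHR":
        return a >> 1, a & 1
    raise ValueError(f"unknown operation: {op}")


print(alu("ADD", 9, 8), alu("SUB", 5, 3), alu("SHL", 0b1001))
```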

Project Smart Diner (March, 2024 – July, 2024)

Smart Diner is a native Android application that streamlines restaurant management through menu management, order tracking and customer feedback. The app was built with Android Studio and Firebase; I contributed backend integration and the UI/UX flows, which were designed in Figma, and managed the development process with Trello. The app emphasises usability and efficient data flows, demonstrating practical mobile development and cloud integration for small business operations.

Native Android development using Android Studio with Firebase backend.

Features include menu management, order tracking and feedback systems.

UI/UX designed in Figma; project managed with Trello for agile delivery.

“Line” – Error Measurement Application for Engineering Labs (Sep, 2025 – Present)

“Line” is a desktop application built with PyQt to support first-year engineering labs by automating experimental error and confidence-limit calculations. It accepts raw data, computes best-fit lines and statistical metrics, and exports results to aid lab reporting. Designed from personal lab experience, the tool reduces manual calculation errors and accelerates report preparation while emphasising usability and pedagogical clarity for novice engineers.

Desktop app built with PyQt and Python for numerical computation.

Generates best-fit lines, confidence limits and error metrics from raw data.

Focus on usability and pedagogy to support first-year students.
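The core calculation behind a best-fit line with error estimates can be sketched with ordinary least squares. The example below is a minimal illustration, not the application's code: the function names are hypothetical, and the t multiplier for the confidence limits is supplied by the caller (for example from a printed t-table) because the Python standard library has no t-distribution.

```python
from math import sqrt


def fit_line(xs, ys):
    """Least-squares fit y = intercept + slope * x.

    Returns (slope, intercept, slope_se, intercept_se), where the last two
    are standard errors estimated from the residuals.
    """
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need at least three (x, y) pairs")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residual_ss = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    s = sqrt(residual_ss / (n - 2))  # residual standard deviation
    slope_se = s / sqrt(sxx)
    intercept_se = s * sqrt(1 / n + mean_x ** 2 / sxx)
    return slope, intercept, slope_se, intercept_se


def confidence_limits(estimate, std_error, t_value):
    """Return (lower, upper) limits as estimate +/- t_value * std_error."""
    half_width = t_value * std_error
    return estimate - half_width, estimate + half_width


# Hypothetical lab readings.
xs = [0, 1, 2, 3]
ys = [1.1, 2.9, 5.1, 6.9]
slope, intercept, slope_se, intercept_se = fit_line(xs, ys)
# 4.303 is the two-sided 95% t value for n - 2 = 2 degrees of freedom.
print(slope, confidence_limits(slope, slope_se, 4.303))
```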

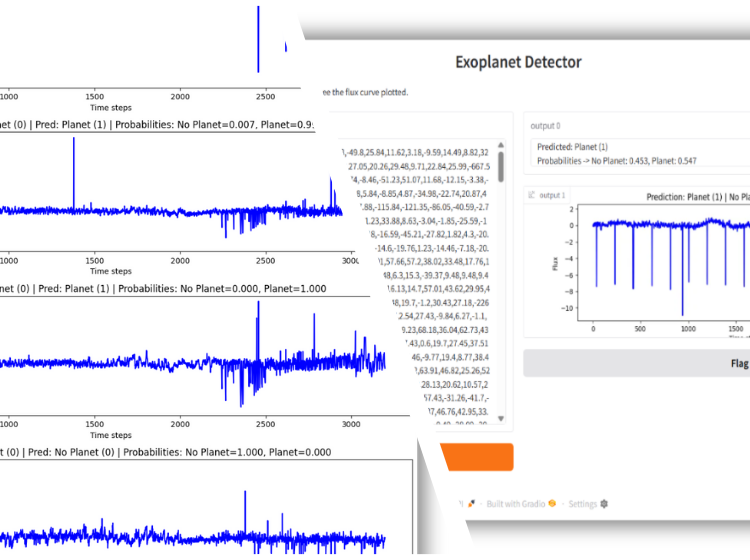

Project ExoTorch (NASA Space Apps Hackathon 2025)

ExoTorch is an end-to-end exoplanet detection pipeline developed during NASA Space Apps 2025. It trains an MLP model on Kepler light-curve data, using class-balancing techniques and a weighted loss to handle rare events, evaluates performance with precision and recall, and exposes inference through an interactive Gradio web app. The project demonstrates deep learning for time-series analysis and accessible deployment for research and public exploration.

Deep-learning pipeline using PyTorch for Kepler light-curve exoplanet detection.

Methods: class balancing, WeightedRandomSampler and weighted loss functions.

Interactive Gradio web app for real-time prediction and visualisation.
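The class-balancing idea can be shown without any deep-learning framework. The sketch below computes inverse-frequency class weights, expands them to per-sample weights, and evaluates precision and recall. It is an illustration under assumptions: the weighting formula is one common choice and may differ from what ExoTorch uses, and the labels are made up. In PyTorch, per-sample weights of this kind are what `torch.utils.data.WeightedRandomSampler` accepts as its `weights` argument, and per-class weights can be passed as `weight` to `torch.nn.CrossEntropyLoss`.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n = len(labels)
    return {cls: n / (len(counts) * count) for cls, count in counts.items()}


def precision_recall(y_true, y_pred, positive=1):
    """Return (precision, recall) for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical imbalanced labels: 8 non-planets (0) and 2 planets (1).
labels = [0] * 8 + [1] * 2
class_weights = inverse_frequency_weights(labels)
sample_weights = [class_weights[y] for y in labels]  # one weight per sample

print(class_weights)
print(precision_recall([1, 1, 0, 0], [1, 0, 1, 0]))
```

With these weights the rare class contributes as much total weight as the common one, which is the property both the sampler and the weighted loss rely on.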

Skills, Tools, Outcomes

Embedded Systems

Practical experience across STM32, Raspberry Pi and FPGA platforms, delivering reliable firmware, real-time control and scalable embedded architectures for physical computing projects.

AI & Vision

Applied computer vision and machine learning with OpenCV, YOLO-inspired models and PyTorch to build detection, alignment and scientific analysis pipelines.

Apps & Interfaces

Built user-centred mobile and desktop applications with Android Studio, Firebase and PyQt, prioritising usability, offline resilience and clear data workflows.